howmuch

A Tricount-like expense-sharing system written in Go

It is a personal project to learn Go and related technologies.

Project Diary

2024/09/30

The idea comes from a discussion with my mom. I was thinking about doing some personal budget management thing, but she suggested that an expense-sharing application could be a good idea. I explained why it was a terrible idea with no value, but in fact it was a really good idea.

First I have to set up a web server. I'm thinking about using gin, since I have played with chi in other projects.

Then I have to add some basic support functions like system logging, versioning, and other things.

Next I need to design the API.

- User management: signup, login, logout.

- A logged-in user must be able to:

  - create an event

  - add other users to that event

- A user can only view their own events, not the events of other users

- A user can add an expense to the event (reason, date, who paid how much, who benefited how much)

- Users in the event can edit or delete one entry

  - changes are sent to friends in the event

- A user can see how much they spent themselves and how much they owe each of the other members

- User can also get the total amount or the histories.

That is what I thought of for now.

Thus, besides a web server, I must have a database that can store all the data, e.g. PostgreSQL. I need a message queue system (RabbitMQ?) to handle changes to an event. That will result in a messaging service sending emails.

I also want to use Redis for cache management.

What else?

OpenAPI + swagger for API management.

And last but not least, Docker + Kubernetes for the deployment.

That is what I am thinking of for now. I will note down other ideas during the project.

2024/10/01

A Go application has 3 parts:

- Config

- Business logic

- Startup framework

Config

The application provides a command-line tool with options to load configs directly, and it should also be able to read configs from YAML/JSON files. We should keep credentials in those files for security reasons.

To do this, we can use pflag to read command-line parameters, viper to read from config files in different formats, os.Getenv to read from environment variables and cobra for the command-line tool.

The execution of the program is then just a command like howmuch run.
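A minimal sketch of the layering described above, where a command-line flag wins over an environment variable, which wins over a default. To stay self-contained it uses only the standard library flag package and os.Getenv rather than pflag, viper and cobra. The option name addr, the variable HOWMUCH_ADDR and the default :8080 are hypothetical.

```go
package main

import (
	"flag"
	"fmt"
	"os"
)

// Config holds the settings the application needs at startup.
type Config struct {
	Addr string
}

// loadConfig resolves each setting with the precedence
// command-line flag > environment variable > default value.
// getenv is injected so the function can be tested without touching the real environment.
func loadConfig(args []string, getenv func(string) string) (Config, error) {
	cfg := Config{Addr: ":8080"} // hypothetical default

	// The environment overrides the default.
	if v := getenv("HOWMUCH_ADDR"); v != "" {
		cfg.Addr = v
	}

	// An explicit flag overrides everything else.
	fs := flag.NewFlagSet("howmuch run", flag.ContinueOnError)
	fs.StringVar(&cfg.Addr, "addr", cfg.Addr, "address the web server listens on")
	if err := fs.Parse(args); err != nil {
		return Config{}, err
	}
	return cfg, nil
}

func main() {
	cfg, err := loadConfig(os.Args[1:], os.Getenv)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(2)
	}
	fmt.Println("listening on", cfg.Addr)
}
```

With viper the same precedence would come from the library itself; the sketch only makes the order explicit.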

Moreover, in a distributed system, configs can be stored on etcd.

Business logic

- init cache

- init DBs (Redis, SQL, Kafka, etc.)

- init web service (http, https, gRPC, etc.)

- start async tasks like watch kube-apiserver; pull data from third-party services; store, register /metrics and listen on some port; start kafka consumer queue, etc.

- Run specific business logic

- Stop the program

- others...

Startup framework

When the business logic becomes complicated, we cannot cram it all into a simple main function. We need something to handle all those tasks, sync or async. That is why we use cobra.

So for this project, we will use the combination of pflag, viper and cobra.

2024/10/02

Logging

Use zap for the logging system. Logs are written to stdout for dev purposes, but they are also written to files. The log files can then be shipped to Elasticsearch for analysis.

Version

Add versioning into the app.

2024/10/03

Set up the web server with some necessary/nice to have middlewares.

- Recovery, Logger (already included in Default mode)

- CORS

- RequestId

Use a channel and OS signals to gracefully shut down the server.

A more comprehensible error code design, made of three parts:

- Classical HTTP code.

- Service error code composed by "PlatformError.ServiceError", e.g. "ResourceNotFound.PageNotFound"

- error message.

The service error code helps to identify the problem more precisely.

2024/10/04

The application architecture follows Clean Architecture, which has several layers:

- Entities: the models of the product

- Use cases: the core business rule

- Interface Adapters: convert data-in to entities and convert data-out to output ports.

- Frameworks and drivers: Web server, DB.

Based on this logic, we create the following directories:

- model: entities

- infra: Provides the necessary functions to set up the infrastructure, especially the DB (output-port), but also the router (input-port). Once set up, we don't touch them anymore.

- registry: Provides a register function for main to register a service. It takes the pass to the output-port (e.g. DBs) and gives back a pass (controller) to the input-port.

- adapter: Controllers are one of the adapters. When they are called, they parse the user input into models and run the usecase rules. Then they send back the response (input-port). For the output-port part, the repo is the implementation of the interfaces defined in usecase/repo.

- usecase: With the input from the adapter, do what has to be done and answer with the result. In the meantime, we may have to store things in DBs. Here we use the Repository pattern to decouple the implementation of the repo from the interface. Thus in usecase/repo we only define interfaces.

Then comes the real design for the app.

Following the Agile method, I don't try to define the entire project at the beginning but step by step, starting at the user part.

    type User struct {
        CreatedAt time.Time
        UpdatedAt time.Time
        FirstName string
        LastName  string
        Email     string
        Password  string
        ID        int
    }

Use Buffalo pop Soda CLI to create database migrations.

2024/10/06

Implement the architecture design for User entity.

Checked out OpenAPI, and found that it is not that simple at all. It requires a whole body of knowledge about web development!

For the test-driven part,

- model layer: just model designs, nothing to test

- infra: routes and db connections, it works when it works. Nothing to test.

- registry: Just return some structs, no logic. Not worth testing

- adapter:

  - input-port (controller) test: it is about testing the parsing of the input values and the writing of the output results. The unit tests of a controller make sure that it behaves as defined in the API documentation. To test, we have to mock the business service.

  - output-port (repo) test: it is about testing the conversion of business models to database models and the interaction with the database. If we are going to test it, it's about simulating different types of database behaviour (success, timeout, etc.). To test, we have to mock the database connection.

- usecase: This is the core part to test, it's about the core business. We provide the data input and we check the data output in a fake repository.

This design, although it may seem overkill for this little project, fits perfectly well with the TDD method.

Concretely, I will do the TDD for my usecase level development, and for the rest, I just put unit tests aside for later.

Workflow

1. OAS Definition

2. (Integration/Validation test)

3. Usecase unit test cases

4. Usecase development

5. Refactor (2-3-4)

6. Input-port/Output-port

That should be the correct workflow. But to save time, I will cut off the integration test part (the 2nd point).

2024/10/07

I rethought the whole API design (even though I have only one so far). I created /signup and /login without thinking too much, but in fact they are not quite RESTful.

REST is all about resources. While /signup and /login are quite comprehensible, and thus service-oriented, they don't follow the REST philosophy, that is to say, resource-oriented.

If we think again about /signup, what it does is create a User resource. Thus, for a backend API, it would be better named User.Create. But what about /login? It doesn't do anything to a User. It would be strange to declare it as a User-related method.

Instead, what /login really does, is to create a session.

As a consequence, we have to create a new struct Session that can be created, deleted, or updated.

It might seem overkill, and in real life, even in the official Pet Store example of OpenAPI, signup and login are under /user. But it opened my mind and forces me to think and design RESTfully!

That being said, for the user side, we shall still have /signup and /login, because on the front end, we must be user-centered. We can even put these 2 functions on the same page with the same endpoint /login. The user enters the email and the password, then clicks on Login or Signup. If the login is successful, then they are logged in. Otherwise, if the user doesn't exist yet, we open up 2 more inputs (first name and last name) for signup. They can just provide the extra information and click again on Signup.

That, again, being said, I am thinking about doing some Front-end stuff just to make the validation tests of the product simpler.

The choice of the front end framework

I have considered several choices.

If I hadn't purposely made the backend provide a REST API, I might have chosen server-side rendering with templ + htmx, or even template + vanilla JavaScript.

I can still write a rather static Go frontend server to serve HTML and call my Go backend. And it might be a good idea for them to communicate over Go's native RPC. It is worth a try.

And I have moved on to Svelte, which seems very simple by design, and the whole compile thing makes it really charming. But this is mainly a Go project, and learning something new with a rather small community means potentially more investment. I can learn it later.

Among Angular, React and Vue, I prefer Vue, for several reasons. First, Angular is clearly overkill for this small demo project. Second, React is good but I personally like Vue's way of doing things. And I work with Vue at work, so I might get more technical help from my colleagues.

So the plan for this week is to have both the Front end part and Backend part working, just for user signup and login.

I would like to put this stuff on a CI pipeline for tests and deployment right away, even though I have barely anything yet. It is always good to do this preparation at an early stage of the project, so we can benefit from it all the way along.

Moreover, even if I don't really finish the project, it can still be something presentable that I can show to a future interviewer.

2024/10/08

Gitea action setup ! 🎉🎉🎉

The next step is to run some checks, builds and tests!

2024/10/09

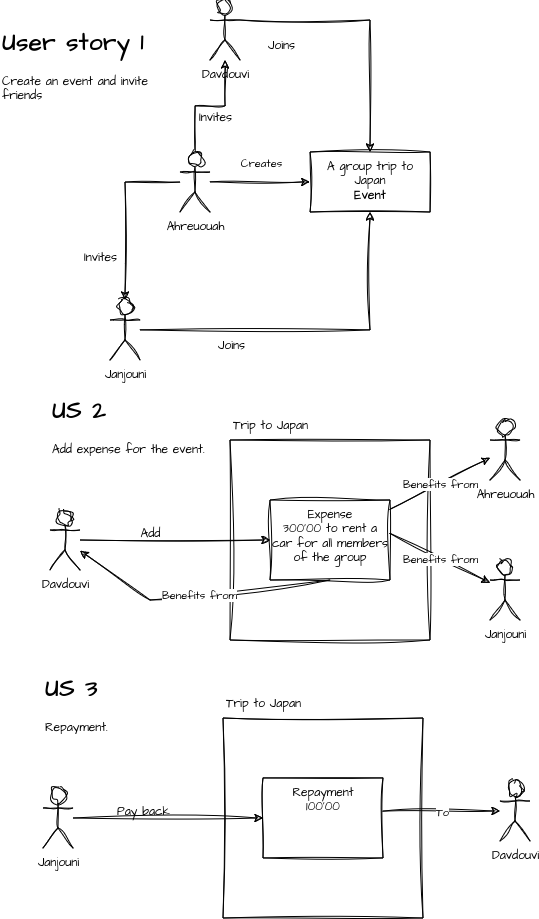

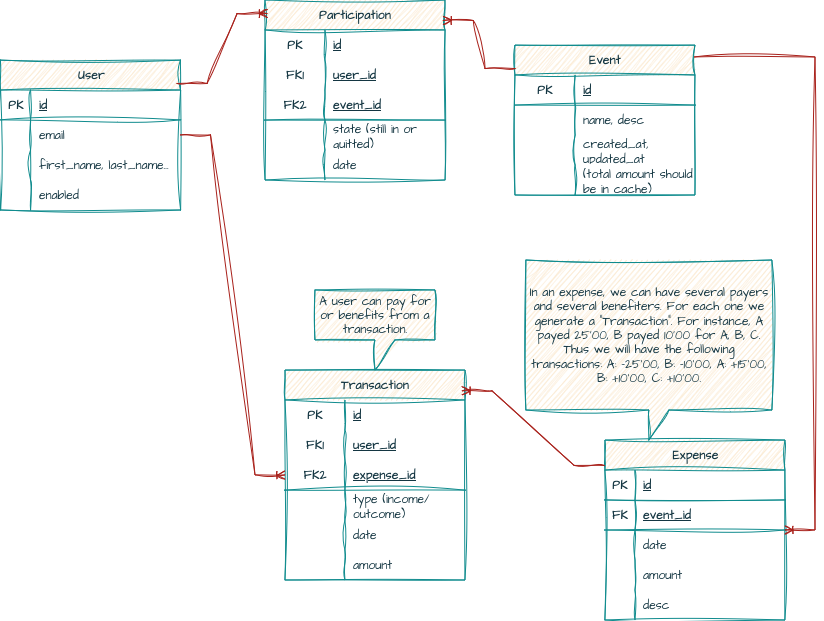

No code today either. But I did some design work on the user stories and the database model.

2024/10/11

I spent 2 days learning some basics of Vue. Learning Vue takes time. There are a lot of concepts and it needs a lot of practice. Even though I may not need a professional-level web page, I don't want to copy one module from this blog and another one from another tutorial. I might just put the front end aside for now and concentrate on my backend Go app.

For now, I will just test my backend with curl.

And today's job is to get the login part done!

2024/10/13

In the end, it took me more than just one night to figure out JWT.

The JWT token is simple because it doesn't need to be stored in and fetched from a database. But there is no way to revoke it other than waiting for it to expire.

To revoke a token, we still have to use a data store. We can store a logged-out user's jti in Redis, and on each authenticated request, look up the cache to check whether the token has been revoked. We set the cache entry's timeout to the expiry time of the token, so that it is removed automatically.

It would be better to inject the Redis connection as a dependency into the Authn middleware so that it's simpler to test.

2024/10/15

Redis is integrated to keep a blacklist of logged-out users. BTW memcached is also interesting. In case I later want to switch to another key-value store, I have made an interface. It also helps with testing. I can even just drop Redis and use a bare native hashmap. Quite a lot of benefits.

And then I realised that I had done something "wrong" with sqlc. I shouldn't have used the pgx driver; the database/sql driver is more universal if I want to switch to sqlite or mysql later.

Well, it's not about changing the technical solution every 3 days, but a system that can survive those changes elegantly must be a robust system, with functionalities well decoupled and interfaces well defined.

I will add some tests for existing code and then it's time to move on to my core business logic.

2024/10/16

I am facing a design problem. My way of implementing the business logic is to first write the core logic code at the domain service level. It will help me identify whether there are any missing parts in my model design. Then, when some of the business logic is done, I can create database migrations and implement the adapter-level code.

The problem is that my design depends heavily on the database. Take the example of adding an expense to an event.

The input is a valid ExpenseDTO which has the event, paiements and receptions. What I must do is open a database transaction where I:

- Get the Event (most importantly the TotalAmount)

- For each paiement and reception, create a transaction related to the User, and insert them into the database

- Update the TotalAmount

- Update the caches if any

If any step fails, the transaction rolls back.

This has barely any logic at all. I think it is not suitable to try to tie this operation to the domain model.

However, there is something that is worth a domain-model-level method: calculating the share of each member of the event. We have the list of members and the balance of each of them, then we do the calculation and send back a list of how much each member should pay to another.
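A minimal sketch of such a calculation, using a simple greedy matching of debtors and creditors. This is one possible approach and not necessarily the one this project ends up with, and it does not try to minimise the number of transfers. Amounts are assumed to be integer cents, and the names Settle and Transfer are hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// Transfer says that From should pay Amount (in cents) to To.
type Transfer struct {
	From, To string
	Amount   int64
}

type entry struct {
	name   string
	amount int64
}

// Settle turns net balances (positive: is owed money, negative: owes money)
// into a list of transfers. The balances must sum to zero.
func Settle(balances map[string]int64) ([]Transfer, error) {
	var creditors, debtors []entry
	var sum int64
	for name, b := range balances {
		sum += b
		switch {
		case b > 0:
			creditors = append(creditors, entry{name, b})
		case b < 0:
			debtors = append(debtors, entry{name, -b})
		}
	}
	if sum != 0 {
		return nil, fmt.Errorf("balances sum to %d, want 0", sum)
	}

	// Largest amounts first; names break ties so the output is deterministic.
	byAmount := func(s []entry) {
		sort.Slice(s, func(i, j int) bool {
			if s[i].amount != s[j].amount {
				return s[i].amount > s[j].amount
			}
			return s[i].name < s[j].name
		})
	}
	byAmount(creditors)
	byAmount(debtors)

	var out []Transfer
	i, j := 0, 0
	for i < len(debtors) && j < len(creditors) {
		// Pay as much as possible from the current debtor to the current creditor.
		pay := debtors[i].amount
		if creditors[j].amount < pay {
			pay = creditors[j].amount
		}
		out = append(out, Transfer{From: debtors[i].name, To: creditors[j].name, Amount: pay})
		debtors[i].amount -= pay
		creditors[j].amount -= pay
		if debtors[i].amount == 0 {
			i++
		}
		if creditors[j].amount == 0 {
			j++
		}
	}
	return out, nil
}

func main() {
	transfers, err := Settle(map[string]int64{"alice": 3000, "bob": -1000, "carol": -2000})
	if err != nil {
		panic(err)
	}
	for _, t := range transfers {
		fmt.Printf("%s pays %d cents to %s\n", t.From, t.Amount, t.To)
	}
}
```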

Finally, I think the business logic is still too simple to be put into a "Domain". For now, the service layer is just enough.

2024/10/17

The following basic use cases are to be implemented first.

- A user signs up

- A user logs in

- A user lists their events (pagination)

- A user sees the detail of an event (description, members, amount)

- A user sees the expenses of an event (total amount, personal expenses, pagination)

- A user sees the detail of an expense: (time, amount, payers, recipients)

- A user adds an expense

- A user updates/changes an expense (may handle some extra access control)

- A user can pay the debt to other members (just a special case of expense)

- A user creates an event (and participates in it)

- A user updates the event info

- A user invites another user by sending a mail with a token.

- A user joins an event by accepting an invitation

- A user cannot see other users' information

- A user cannot see the events that they didn't participate in.

For the second stage:

- A user can archive an event

- A user deletes an expense (may handle some extra access control)

- A user restores a deleted expense

- Audit log for expense updates/deletes

- A user quits an event (they cannot actually, but we can make it look as if they quit). No, we can't quit!

With those functionalities, there will be a usable product. And then we can work on other aspects. For example:

- introduce an admin to handle users.

- user info updates

- deleting user

- More user related contents

- Event related contents

- ex. Trip journal...

Stop dreaming... Just do the simple stuff first!

2024/10/18

I spent some time to figure out this one! But I don't actually need it for now. So I just keep it here:

    SELECT
        e.id,
        e.name,
        e.description,
        e.created_at,
        json_build_object(
            'id', o.id,
            'first_name', o.first_name,
            'last_name', o.last_name
        ) AS owner,
        json_agg(
            json_build_object(
                'id', u.id,
                'first_name', u.first_name,
                'last_name', u.last_name
            )
        ) AS users -- Aggregation for users in the event
    FROM "event" e
    JOIN "participation" p ON p.event_id = e.id -- participation linked with the event
    JOIN "user" u ON u.id = p.user_id -- and the query user
    JOIN "user" o ON o.id = e.owner_id -- get the owner info
    WHERE e.id IN (
        SELECT pt.event_id FROM participation pt WHERE pt.user_id = $1
        -- consider the events participated by user_id
    )
    GROUP BY
        e.id, e.name, e.description, e.created_at,
        o.id, o.first_name, o.last_name;

2024/10/19

I don't plan to handle deletions at this first stage, but I note down what I have thought of.

- Just delete. But keep a replica at the front end of the object that we are deleting. And propose an option to restore (so a new record is added to the DB)

- Just delete, but wait. The request is sent to a queue with a timeout of several seconds; if the user regrets it, they can cancel the request. This can be done on the front end, but also on the back end. I think it is better to do it on the front end.

- Never delete, but keep a deleted state in the DB. Such rows will just be ignored when counting.

- Delete when doing database cleanup. The lines marked as deleted will be processed when we clean up the DB, and they will be definitively deleted at that time.

I can create an audit log table to log all the critical changes in my expense table (update or delete).

Finished with the basic SQL commands. Learned a lot about SQL: JOIN, aggregation and CTEs. SQL itself has quite a lot of things to learn; this is on my future learning plan!

I found it quite interesting that with SQL alone, we can express most of the business logic. It is a must-have skill for software design and development.

2024/10/20

I was thinking that I should write tests for the sqlc-generated code. Then I found gomock and saw how it is done in the techschool/simplebank project. It's a great tutorial project. It makes me question my own project's structure, which seems overcomplicated, at least at the repo level. I don't actually use the sqlc-generated objects; instead I convert them to my Retrieved objects. But with some advanced configuration we could make the sqlc output objects directly usable. That would save a lot of code.

The problem I saw there is the dependency on sqlc/models, and the models designed there have no business logic. Everything is done in the handlers, and the handlers query the DB directly.

More concretely, sqlc generates RawJSON for some fields that are embedded structs. So I have to do the translation somewhere.

So I will just stick to the plan and keep going with the predefined structure.

I have to figure out how to use the generated mock files.

The goal for next week is to finish the basic operations for each level and run some integration tests with curl.